
VMware kills vRAM memory tax with vSphere 5.1 server virt

Stretching VMs, and taking on Microsoft

VMworld 2012: The most important new feature of the new ESXi 5.1 hypervisor and its related vSphere 5.1 tools, which made their debut at the VMworld virtualization extravaganza today, is not a feed or speed, but the fact that VMware has dropped the much-hated vRAM memory tax that came out last year with vSphere 5.0.

Under the vRAM memory tax, each edition of vSphere came with a virtual memory cap per license, and if the VMs on your ESXi host needed more virtual memory than that, you had to buy enough licenses to cover the total virtual memory in use. This caused much gnashing of teeth among VMware's customers, and a few weeks later VMware backpedaled a bit and raised the memory caps by between 33 and 50 per cent, but customers remained annoyed by the whole idea.
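To make the vRAM arithmetic concrete, here is a minimal Python sketch of how such a scheme counts licenses. It is a simplified model under stated assumptions: every physical socket still needs its own license, and the pooled vRAM entitlement must cover all virtual memory configured for running VMs. The function name and the `gb_per_license` value are hypothetical, not VMware's actual per-edition caps.

```python
import math


def licenses_needed(sockets: int, vram_gb: float, gb_per_license: float) -> int:
    """Estimate licenses under a vRAM-style scheme (simplified, hypothetical model).

    Assumes one license per physical socket is the floor, and that the pooled
    entitlement (licenses * gb_per_license) must cover all configured vRAM.
    """
    if sockets < 1 or gb_per_license <= 0:
        raise ValueError("need at least one socket and a positive entitlement")
    # Licenses required just to cover the memory, rounded up.
    by_memory = math.ceil(vram_gb / gb_per_license)
    # Whichever constraint is larger sets the bill.
    return max(sockets, by_memory)


# A two-socket host running VMs with 200GB of vRAM, at a hypothetical 32GB per license.
print(licenses_needed(sockets=2, vram_gb=200, gb_per_license=32))  # 7
```

Under pure per-socket pricing, the same two-socket host needs two licenses no matter how much memory its VMs use, which is the simplification VMware is now offering.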

In a keynote announcing the new server virtualization tools, incoming CEO Pat Gelsinger said that VMware was "striking that word from the vocabulary," referring to vRAM, and added that the new tools would all be priced on a per-socket basis rather than on a mix of sockets, virtual memory used, and VMs under management.

And Paul Maritz, the outgoing CEO who approved the vRAM pricing scheme, was even more blunt when asked if vRAM pricing was a mistake. "Yes, it is an admission that we made things only more complex, and we are rectifying that," Maritz said with his usual calm bluntness. "So, mea culpa."

It is also an admission that VMware's desire to squeeze more money out of customers for the basic hypervisor and management tool stack is not going to work, with customers resisting and Microsoft and Red Hat competing more aggressively to unseat VMware as the hypervisor of choice in the data center.

ESXi 5.1, a slightly more monstrous hypervisor

The ESXi 5.1 hypervisor supports new hardware, both the physical and the virtual kind. On the physical side, ESXi 5.1 can run atop the latest processors from Intel and Advanced Micro Devices, including processors that are not even here yet. Specifically, the hypervisor can run on "Sandy Bridge" Xeon E5 and "Ivy Bridge" Xeon E3 processors as well as other future members of the Ivy Bridge family of chips.

The hypervisor has also been tuned to run on future Opteron 3300, 4300, and 6300 processors based on the "Piledriver" cores from AMD, which are expected to start coming to market in late 2012 and into early 2013 in a staggered release.

On the virtual hardware side, deep within the ESXi hypervisor sits an idealized x86 server that abstracts the underlying iron and that virtual machines run atop. VMware calls this the virtual machine hardware, and ESXi 5.1 supports what is called Version 9 of this abstracted hardware.

Among other things, the Version 9 virtual hardware supports Intel's VT-x with Extended Page Tables (EPT) virtualization assistance features as well as AMD's AMD-V with Rapid Virtualization Indexing (RVI), sometimes called nested page tables. Both VT-x/EPT and AMD-V/RVI help reduce some of the overhead that the hypervisor and guest operating systems impose on the physical processors.

The important thing about the virtual hardware abstraction layer is that, unlike with earlier releases of ESX Server, you don't have to stop and reload a running VM to update it to the new hardware version if you don't want to. ESX Server 3.5 supported Version 4 of this virty hardware abstraction, ESX Server and ESXi 4.0 and 4.1 supported Version 7, and ESXi 5.0 supported Version 8.

But in the wake of the announcement of ESXi 5.0 last year, customers told VMware that they did not want to shut down, update, and restart running VMs to upgrade them to the new virty hardware (and thereby get some of the latest features of the VM and hypervisor); rather, they just wanted to upgrade the hypervisor underneath these running VMs and leave them the heck alone.

And thus, now VMware is doing the right thing and supporting older VM virty hardware levels with the latest hypervisor. So in plain English, any VM that was generated on VMware ESX Server 3.5 or later can run atop ESXi 5.1 unchanged.

The ESXi 5.1 hypervisor also supports Microsoft's forthcoming Windows 8 desktop operating system, due later this year, and its big brother, Windows Server 2012, due next month. Technically, the new VMware hypervisor also supports Apple's Mac OS X 10.5, 10.6, and 10.7 operating systems, but Apple would probably be very unhappy with you if you hacked Mac OS X images onto non-Apple servers.

VMware ESXi VM stats, 5.0 versus 5.1

With ESXi 5.0 last year, the hypervisor itself was able to span servers with up to 160 cores and 2TB of main memory. That was the upper limit of hardware based on Intel's ten-core Xeon E7 processors if you built a 16-socket machine. That's the same core count that the hypervisor spanned with vSphere 4.1 two years ago, but that hypervisor could only see 1TB of main memory.

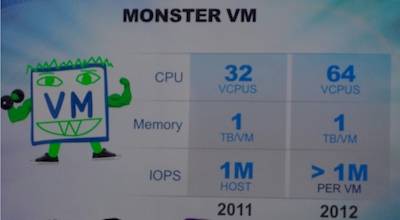

An individual virtual machine running on the ESXi 5.0 hypervisor could address up to 1TB of virtual memory and could span up to 32 virtual CPUs. A virtual CPU is a core when you are talking about an Opteron processor and a thread if you have HyperThreading turned on inside a Xeon processor.

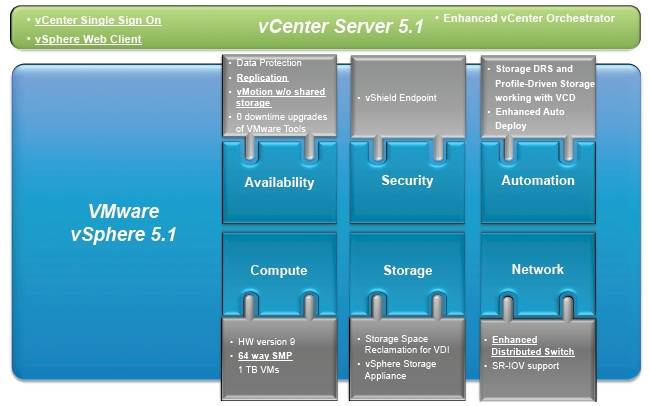

Block diagram of the vSphere 5.1 server virtualization stack

It was not clear at press time what the configuration maximums of the ESXi 5.1 hypervisor itself were in terms of the number of cores it can span or the amount of physical memory it can address. The VMs running atop the new hypervisor have exactly the same 1TB of virtual memory limit, but now you can let a single VM span up to 64 virtual CPUs.

A four-socket machine based on the new Xeon E5-4600 processor has eight cores per socket and two threads per core for a total of 64 threads, which means you can make a VM that spans the entire machine. And a four-socket server based on the 16-core Opteron 6200 processors, which lack HyperThreading, maxes out at 64 cores (and therefore 64 threads) in the box.
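The arithmetic behind those two four-socket boxes can be checked with a short Python sketch. The 32 and 64 vCPU per-VM ceilings for ESXi 5.0 and 5.1 come from the text; the function and variable names are illustrative, and the model simply assumes each hardware thread (or each core, where there is no HyperThreading) is one logical CPU that a virtual CPU can map to.

```python
def hardware_threads(sockets: int, cores_per_socket: int, threads_per_core: int = 1) -> int:
    """Logical CPUs in a box: sockets x cores per socket x hardware threads per core."""
    return sockets * cores_per_socket * threads_per_core


# Per-VM virtual CPU ceilings cited in the article.
MAX_VCPUS = {"ESXi 5.0": 32, "ESXi 5.1": 64}

boxes = {
    "4 x Xeon E5-4600 (8 cores, HyperThreading on)": hardware_threads(4, 8, 2),
    "4 x Opteron 6200 (16 cores, no HyperThreading)": hardware_threads(4, 16),
}

for name, threads in boxes.items():
    for release, limit in MAX_VCPUS.items():
        fits = "yes" if threads <= limit else "no"
        print(f"{name}: {threads} threads; one VM can span it on {release}? {fits}")
```

Both boxes come to 64 logical CPUs, which is why a single ESXi 5.1 VM can cover either one while an ESXi 5.0 VM could cover only half.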

So to one way of thinking, there is no need to make the hypervisor or its VMs any larger, especially with Intel in no hurry to update the Xeon E7s with a Sandy Bridge or Ivy Bridge variant that would add more cores or memory capacity.

Except for one thing: Microsoft and its desire to supplant VMware's vSphere wares with its Hyper-V 3.0 and System Center double-whammy. With the forthcoming Hyper-V 3.0 hypervisor, Microsoft will span 320 cores (or threads if you turn on HyperThreading on the Xeon chips) and up to 4TB of main memory on the host system.

The VM sizing is the same on Hyper-V 3.0 as on ESXi 5.1, with up to 64 virtual CPUs and up to 1TB of virtual memory allocated to a big fat VM. You would think that VMware would want to meet or beat Microsoft on every spec possible to protect its substantial lead in server virtualization in the data center.

Steve Herrod, CTO at VMware, said in the keynote address that VMware had done a lot of work boosting the I/O performance into and out of virtual machines with ESXi 5.1, raising it to over 1 million I/O operations per second from a single VM, compared to 1 million IOPS from an entire host with ESXi 5.0 last year. This is a big change, and on par with the 985,000 IOPS that Microsoft said Hyper-V 3.0 could deliver when it was previewed at TechEd back in June.

As for his former employer (meaning Microsoft), Maritz was not impressed with Hyper-V 3.0.

"This is not a new strategy on Microsoft's part," explained Maritz during a press conference after the keynote. "Their strategy for the past seven years has been that their product is good enough, and this is the third time we have heard it."

"The reality is that people's expectations are rapidly maturing. Hypervisors are free - everybody is giving them away. At the moment that Microsoft is announcing that they are good enough for server virtualization, the game has changed and we are announcing data center virtualization."

It's not always about feeds and speeds in the physical or virtual hardware worlds, and there are plenty of other improvements in the ESXi hypervisor, the related vCenter Server management console, and the vSphere extensions to the hypervisor.

ESXi 5.1 has a new-and-improved CPU virtualization technique, which has been given the name virtualized hardware virtualization or VHV for short, that gives guest operating systems running inside of the VM "near native access to the physical CPU."

ESXi 5.1 also allows CPU performance counters and hardware-assisted virtualization information to be exposed to guest operating systems, to help programmers better debug and tune applications that will be running atop VMs.

Another important feature of the ESXi 5.1 hypervisor is its ability to live-migrate a virtual machine between two distinct physical servers running the hypervisor without requiring those machines to be attached to the same storage area network.

The SAN requirement has been in effect since VMotion live migration debuted years back with ESX Server, but now VMware is lockstepping VMotion for VMs and VMotion for storage (which moves files between storage arrays in much the same fashion) so that the memory state and files describing a running VM can be teleported between servers using their own locally attached storage. It will be interesting to see what kind of performance overhead this non-SAN live migration has.

And finally, vSphere 5.1 includes a new Web-based client that you can use instead of the Windows-based console or the Linux-based virtualized console that debuted last year with vSphere 5.0. This new web console snaps into vCloud Director, the cloudy orchestration tool sold by VMware.

Still not cheap, but priced for value

The vSphere 5.1 stack comes in six editions: the existing Essentials, Essentials Plus, Standard, Enterprise, and Enterprise Plus versions, plus a new Standard Edition with Operations Manager bundled in, all with per-socket pricing.

Each edition has an increasing amount of functionality. vSphere Essentials costs $495 and is licensed to run across three physical hosts with a maximum of two processors each. Essentials Plus adds in the vSphere Storage Appliance, a virtual SAN that runs on servers, and costs $4,495 across those three machines.

The Standard Edition costs $995 per socket, while tossing in vCenter Operations Manager boosts that price to $1,995 per socket. The Enterprise Edition will run you $2,875 per socket, while the top-end Enterprise Plus runs $3,495 per socket. El Reg will do a more thorough analysis of features and pricing for ESXi and vSphere 5.1 in a future story.
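As a rough illustration of how per-socket list prices add up, the Python sketch below totals license costs using only the figures quoted above. It is a back-of-the-envelope model, not VMware's pricing calculator: it ignores support and subscription fees, discounts, and any separately sold management licenses, and the function and dictionary names are hypothetical. The two Essentials kits are treated as flat-priced bundles capped at three hosts with two processors each.

```python
# List prices quoted in the article, in US dollars per socket.
PER_SOCKET = {
    "Standard": 995,
    "Standard with Operations Manager": 1995,
    "Enterprise": 2875,
    "Enterprise Plus": 3495,
}
# Essentials kits: one flat price covering up to three hosts of up to two sockets each.
KITS = {"Essentials": 495, "Essentials Plus": 4495}
KIT_MAX_HOSTS, KIT_MAX_SOCKETS = 3, 2


def license_cost(edition: str, hosts: int, sockets_per_host: int) -> int:
    """Rough list-price total for licensing `hosts` servers (excludes support fees)."""
    if edition in KITS:
        if hosts > KIT_MAX_HOSTS or sockets_per_host > KIT_MAX_SOCKETS:
            raise ValueError(
                f"{edition} covers at most {KIT_MAX_HOSTS} hosts "
                f"with {KIT_MAX_SOCKETS} sockets each"
            )
        return KITS[edition]
    if edition not in PER_SOCKET:
        raise KeyError(f"unknown edition: {edition}")
    # Memory is not an input: the bill depends only on the socket count.
    return PER_SOCKET[edition] * hosts * sockets_per_host


# Four two-socket servers on Enterprise Plus: 8 sockets x $3,495.
print(license_cost("Enterprise Plus", hosts=4, sockets_per_host=2))  # 27960
```

Because memory no longer enters the formula, stuffing more RAM into those four hosts leaves the total unchanged, which is the practical upshot of dropping vRAM.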

vSphere 5.1 will be available on September 11. ®