Intel plugs both your sockets with 'Jaketown' Xeon E5-2600s

Oof! Chipzilla unzips double bulge and gets its stuff out

The Xeon E5-2600, formerly known by the code-name "Jaketown" inside of Intel and "Sandy Bridge-EP" when Intel referred to it externally, is finally here for mainstream, two-socket servers.

And now the 2012 server cycle begins in earnest – even if it wasn't soon enough for the most ardent data-center motorheads.

The Xeon E5-2600 is one of a family of server chips based on the Sandy Bridge architecture that Intel has either put out in 2011 or will put out in 2012. The chips are based on a core design similar to the one employed in the current 2nd Generation Core processors for laptops and desktops, and in the Xeon E3-1200 processors for single-socket servers and workstations, which came out early last year.

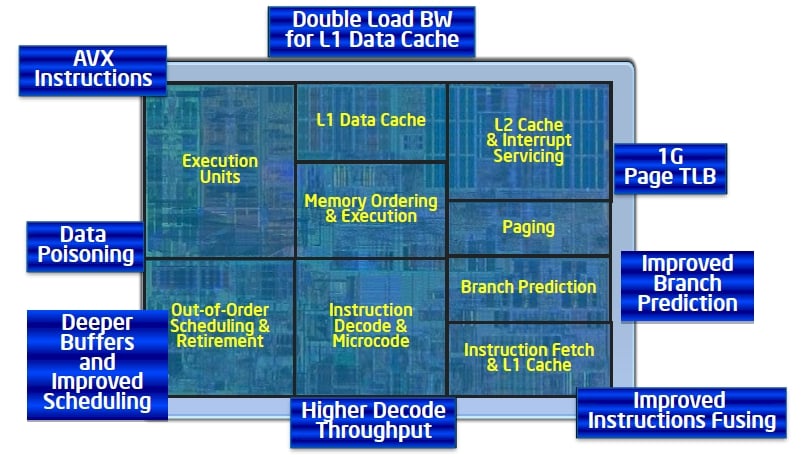

Among the many new features in the Sandy Bridge architecture that are shared across all processors are support for Advanced Vector Extensions (AVX) floating-point math and a revamped Turbo Boost 2.0 overclocking mechanism that is more efficient and flexible than the prior implementation.

The Xeon E5-2600 package

All Sandy Bridge processors are manufactured in Intel's 32-nanometer wafer-baking processes, not the 22nm "Tri-Gate" process that Intel will fire up later this year for the "Ivy Bridge" family of PC processors.

Intel generally does not use a new process first on its volume server chips, so eventually there will be Ivy Bridge variants of Xeons that get the 22nm shrink. But that is not today.

Intel was widely expected to put the Xeon E5-2600 processors into the field in full volume last fall, but instead it put the chips out in limited volume (and under non-disclosure) to selected HPC and hyperscale data-center customers who could not wait until today's launch to get to Jaketown.

The Xeon E5-2600 is not just a Xeon E3-1200 with some two-way SMP glue on it to make it work pretty with the "Patsburg" C600 chipset that's part of the server platform that Intel calls "Romley." There's a lot more to it than that.

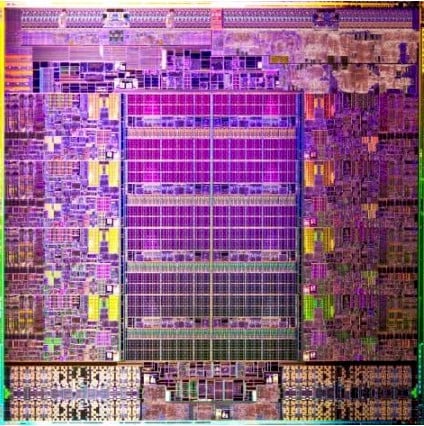

Intel briefed El Reg about all the technical goodies in the Xeon E5-2600 processors ahead of the launch, and walked us through the details of the chip, which weighs in at 2,263,000,000 transistors on a silicon die that has 416 square millimeters of area. The Xeon E5-2600 packs a lot of functionality into those transistors.

Let's start with the cores.

Each core on the Xeon E5-2600 chip has a completely revamped branch predictor hanging off its 32KB instruction cache, and the entire "front end" of the chip – the L1 instruction cache, predecode unit, instruction queue, decoder unit, and out-of-order execution unit – has been designed to sustain a higher level of micro-ops bandwidth and use less power by turning off elements of the front end when it can use micro-ops caches added to the chip.

The core has 32KB of L1 data cache and 256KB of L2 (or mid-level, as Intel sometimes calls it) cache memory. It has two load units, which can do two 128-bit loads per cycle, and a store unit.

Die shot of the Xeon E5-2600

The AVX unit on the cores can do two floating point operations per cycle – twice what the current Xeon 5600s can do – and it moved from 128-bit to 256-bit processing, as well. This is a huge jump, and one that matches what AMD can do with a "Bulldozer" core with half the cores turned off and the scheduler running 256-bit floating-point instructions through half the cores.

The Xeon E5-2600 is designed to have as many as eight cores, and like last year's "Westmere-EX" Xeon E7 processor for high-end four-socket and eight-socket servers and the impending "Poulson" Itanium processor expected this year, the Jaketown chip features a "cores out" design and a ring interconnecting the shared L3 caches and those cores so they can share data.

Each core has a 2.5MB segment of L3 cache loosely associated with its core, but these are glued together by the ring into a 20MB shared cache, which Intel often calls the last-level cache.

Obviously, Intel searches through its chip bins and finds parts in which all of the components on the chip are working, and makes other parts with fewer cores and smaller caches as a means of improving its yields and fleshing out its Xeon E5-2600 line with different performance and price points.

As with past Xeon and Itanium chips, the Xeon E5-2600 has QuickPath Interconnect (QPI) links coming off the chip to do point-to-point communications between the processors. The QPI link agent, cores, L3 cache segments, DDR3 memory controller, and an "I/O utility box" all have stops on this ring bus. The utility box includes a Direct Media Interface, PCI-Express, and VT-d I/O virtualization as a unit, sitting on this ring bus at the same stop.

Jeff Gilbert, the chief architect of the Xeon E5-2600 processor, told El Reg that this bidirectional, full-ring interconnect has more than a terabyte per second of bandwidth coming off and going onto the ring. Those two QPI 1.1 agents that cross-couple the two Xeon E5-2600 processors share an aggregate of 70GB/sec of bandwidth across those two links. The QPI links run at 6.4GT/sec, 7.2GT/sec, or 8GT/sec, depending on the model of the chip.

One big change with the Xeon E5-2600 chips is the integration of PCI-Express 3.0 controllers into the I/O subsystem right there on the die. The PCI-Express 3.0 controller on the chip implements 40 lanes of I/O traffic, which is sliced and diced in various ways in conjunction with the Patsburg C600 chipset.
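To put those 40 lanes in perspective, here is a rough sketch of the peak bandwidth they could carry. The 8GT/sec signalling rate and 128b/130b line encoding are taken from the PCI-Express 3.0 specification rather than from Intel's briefing, and packet and protocol overhead are ignored, so treat the results as ceilings, not as measured numbers.

```python
# Rough PCI-Express 3.0 bandwidth ceiling for one Xeon E5-2600 socket.
# Assumptions (not from the article): 8 GT/s per lane and 128b/130b
# line encoding, per the PCIe 3.0 spec; protocol overhead is ignored.
GT_PER_SEC = 8.0        # giga-transfers per second per lane (one bit each)
ENCODING = 128 / 130    # usable fraction of bits after 128b/130b encoding
LANES = 40              # lanes per socket, as stated in the article


def pcie3_gbytes_per_sec(lanes: int) -> float:
    """Peak one-direction bandwidth in GB/s for the given lane count."""
    return lanes * GT_PER_SEC * ENCODING / 8  # 8 bits per byte


if __name__ == "__main__":
    # Common slot widths, then the full 40-lane complement.
    for width in (4, 8, 16, LANES):
        bw = pcie3_gbytes_per_sec(width)
        print(f"x{width}: {bw:.1f} GB/s per direction")
```

How those lanes are actually carved up into slots depends on the motherboard and the chipset configuration, which the sketch does not try to model.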

Each E5-2600 socket has four memory channels, up from three with the Xeon 5600s, and you can hang three DIMMs per channel for a total of a dozen per socket. Intel is supporting unregistered and registered DDR3 memory sticks as well as the new load-reduced, or LRDIMM, memory for those who want to get the maximum memory capacity per socket, which stands at 384GB. Regular 1.5 volt as well as "low voltage" 1.35 volt memory are supported on the processors, and memory can run at speeds of 800MHz, 1.07GHz, 1.33GHz, or 1.6GHz.
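The memory figures above can be sanity-checked with a little arithmetic. The sketch below assumes a standard 64-bit (8-byte) DDR3 data bus per channel, which is not stated in the briefing, and computes theoretical peaks only; real, sustained bandwidth will come in lower.

```python
# Back-of-the-envelope memory figures for one Xeon E5-2600 socket.
CHANNELS = 4              # memory channels per socket (from the article)
DIMMS_PER_CHANNEL = 3     # DIMMs per channel (from the article)
MAX_GB_PER_SOCKET = 384   # maximum capacity per socket (from the article)
BYTES_PER_TRANSFER = 8    # assumption: standard 64-bit DDR3 data bus


def dimm_size_gb() -> float:
    """DIMM size implied by the maximum capacity and the slot count."""
    return MAX_GB_PER_SOCKET / (CHANNELS * DIMMS_PER_CHANNEL)


def peak_gb_per_sec(mega_transfers: float, sockets: int = 1) -> float:
    """Theoretical peak bandwidth in GB/s at a given transfer rate (MT/s)."""
    return sockets * CHANNELS * mega_transfers * BYTES_PER_TRANSFER / 1000


if __name__ == "__main__":
    print(f"Implied DIMM size: {dimm_size_gb():.0f} GB")
    # Memory speeds from the article, expressed in mega-transfers/sec.
    speeds = {"800MHz": 800.0, "1.07GHz": 1066.67,
              "1.33GHz": 1333.33, "1.6GHz": 1600.0}
    for label, mts in speeds.items():
        one = peak_gb_per_sec(mts)
        two = peak_gb_per_sec(mts, sockets=2)
        print(f"{label}: {one:.1f} GB/s per socket, {two:.1f} GB/s for two")
```

On these assumptions, hitting 384GB per socket takes a dozen 32GB sticks, and a two-socket box with 1.6GHz memory tops out at a little over 100GB/sec on paper.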

The important thing about the Xeon E5 and the Romley platform design, explains Ian Steiner, a processor architect at Intel's Beaverton, Oregon facility, is that the platform gets back to the core-to-memory bandwidth ratio from the "Nehalem" Xeon 5500 launched three years ago.

The design gets the cache "out of the way". Thanks to that high-bandwidth ring interconnect on the chip, a bunch of microarchitecture tweaks relating to the memory controller scheduler, and that extra memory channel, you can now get around double the memory bandwidth if you scale from one to two sockets on a Xeon E5 box.

The move from three to four memory channels is worth about 33 per cent, moving from 1.33GHz to 1.6GHz memory adds another 20 per cent, and the ring and microarchitecture changes contribute a further 40 per cent or so.

Based on internal benchmark tests done by Intel, a two-socket Xeon 5600 box basically started choking at 40GB/sec with 1.33GHz memory. But you can push a two-socket Xeon E5-2600 box to more than 60GB/sec using 1.07GHz memory and as high as 90GB/sec using 1.6GHz memory.
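One way to read the three percentages quoted above is as gains that compound on top of one another rather than as slices of a single pie. That reading is our assumption, not something Intel spelled out, but the sketch below shows it lands close to the benchmark figures.

```python
# Compounding the three quoted gains, on the ASSUMPTION that they multiply.
GAINS = {
    "three to four memory channels": 0.33,
    "1.33GHz to 1.6GHz memory": 0.20,
    "ring and microarchitecture changes": 0.40,
}
XEON_5600_GB_PER_SEC = 40.0  # two-socket Xeon 5600 ceiling (from the article)


def compound(gains: dict) -> float:
    """Overall speed-up factor if each gain multiplies the previous ones."""
    factor = 1.0
    for gain in gains.values():
        factor *= 1.0 + gain
    return factor


if __name__ == "__main__":
    factor = compound(GAINS)
    estimate = XEON_5600_GB_PER_SEC * factor
    print(f"Combined speed-up: {factor:.2f}x")
    print(f"Estimated E5-2600 bandwidth: {estimate:.1f} GB/s")
```

Multiplied together, the three gains come to a bit more than 2.2x, which takes the Xeon 5600's 40GB/sec to roughly the 90GB/sec Intel cites for the E5-2600 with 1.6GHz memory.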