Intel puts x64 in a parallel universe

Taking the MIC out of Larrabee

We've all been wondering exactly what Intel would do with the various multicore x64 processors it had designed as co-processors to accelerate graphics and other applications with lots of number-crunching. The answer, as Intel explained at the International Supercomputing Conference in Hamburg, Germany this week, is simple: Replace lots of the standard Xeon processors commonly used in massively parallel supercomputers with many-cored systems on a chip, and drop the whole idea of doing discrete graphics cards to compete against Nvidia and Advanced Micro Devices.

At last fall's SC09 supercomputer trade show, Intel's chief technology officer, Justin Rattner, showed off an experimental graphics co-processor code-named "Larrabee," which was able to hit one teraflops running the SGEMM single precision, dense matrix multiply benchmark test when Intel turned on all the cores and then overclocked it for a short spike. But don't get too excited.

Only a few weeks later, Intel condemned Larrabee to the purgatory of development status because it was late coming to market. And last week, knowing that everyone would be asking questions about Larrabee's kickers at the ISC event in Hamburg, Intel's PR machine let it be known that it was bowing out of the discrete graphics processor market, but hinted that the substantial work it had done would be repurposed to make HPC co-processors that accelerate workloads running on x64 processors.

Kirk Skaugen, general manager of Intel's Data Center Group, had to work over the Memorial Day holiday, announcing something Intel is calling the Many Integrated Core architecture, or MIC for short, which Intel is billing as the "industry's first general purpose many core architecture." And rather than having MIC chips be the sole processors in a system, what Intel is going to do is plunk these chips into HPC systems as co-processors, just like Nvidia is doing with Tesla GPUs and Advanced Micro Devices is doing with Stream GPUs.

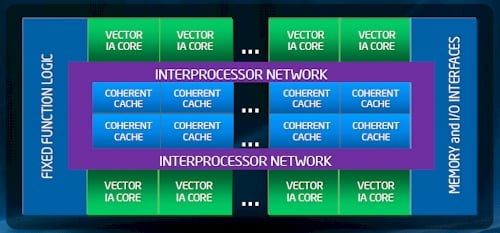

Here's what the MIC block diagram looks like, and if you remember the vague statements that were made about Larrabee, this sure looks like the same product:

The Many Integrated Core architecture block diagram

Like Larrabee, the MIC chips that Intel plans to bring to market will marry an x64 core and vector processors to some cache and then plunk down lots and lots of these units on a chip with a fast interconnect that keeps the caches for each chip coherent (so they can share data quickly and function more or less like a baby parallel supercomputer). The Larrabee design used a superscalar x64 core (without the out-of-order execution of Xeons, so akin to the Atom chip in some respects) and a 512-bit vector math unit that could do 16 floating point operations per clock doing single precision math. Larrabee also had a very wide ring bus for linking all of the cores and the caches together. As you can see from the MIC block diagram, the same elements are in the MIC architecture.
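For the arithmetically inclined, the "16 operations per clock" figure falls straight out of the vector width: a 512-bit register holds sixteen 32-bit single precision values. The snippet below is a back-of-the-envelope sketch, not anything from Intel; the function and constant names are made up for illustration, and the double precision line is only a lane count, not a claim about what the hardware can actually sustain.

```python
# Back-of-the-envelope lane arithmetic. All names here are illustrative
# assumptions, not Intel terminology or an Intel API.

VECTOR_WIDTH_BITS = 512      # vector unit width cited for Larrabee
SINGLE_PRECISION_BITS = 32   # size of an IEEE 754 single precision float
DOUBLE_PRECISION_BITS = 64   # size of an IEEE 754 double precision float


def vector_lanes(vector_bits: int, element_bits: int) -> int:
    """Return how many elements of a given size fit in one vector register."""
    return vector_bits // element_bits


if __name__ == "__main__":
    sp = vector_lanes(VECTOR_WIDTH_BITS, SINGLE_PRECISION_BITS)
    dp = vector_lanes(VECTOR_WIDTH_BITS, DOUBLE_PRECISION_BITS)
    print(f"single precision lanes: {sp}")  # 16, matching the figure above
    print(f"double precision lanes: {dp}")  # lane count only, not a throughput claim
```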

Skaugen said in his keynote at ISC that the MIC chip family would be known as Knights, and he held up what is presumably the same co-processor that Intel showed off at SC09 last fall, which he identified as the Knights Ferry co-processor:

The Intel Knights Ferry HPC co-processor.

This card, which looks exactly like a PCI-Express graphics card, is intended to be used as a software development platform. It has 32 cores, Skaugen divulged, running at 1.2 GHz, and it puts four execution threads on each core for a total of 128 threads. The chip has 8MB of shared coherent cache — that could be a mix of L1 and L2 cache; Skaugen didn't say — and the package comes with 1GB or 2GB of GDDR5 graphics memory for the cores to use as they crunch their numbers.
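Those numbers invite a quick sanity check. The thread count is plain multiplication from what Skaugen divulged. The peak figure is a rougher guess: it assumes each Knights Ferry core can do the same 16 single precision operations per clock cited for Larrabee, which Intel has not confirmed for this card, and it says nothing about what real code would sustain. The function names are hypothetical.

```python
# Rough sanity check on the Knights Ferry figures. The per-core rate of
# 16 single precision operations per clock is the Larrabee number and is
# an ASSUMPTION for Knights Ferry, not a confirmed spec.

CORES = 32                  # stated by Skaugen
THREADS_PER_CORE = 4        # stated by Skaugen
CLOCK_GHZ = 1.2             # stated by Skaugen
ASSUMED_OPS_PER_CLOCK = 16  # assumption carried over from Larrabee


def total_threads(cores: int, threads_per_core: int) -> int:
    """Hardware threads across the whole chip."""
    return cores * threads_per_core


def peak_gflops(cores: int, ops_per_clock: int, clock_ghz: float) -> float:
    """Theoretical peak in gigaflops: cores x operations per clock x GHz."""
    return cores * ops_per_clock * clock_ghz


if __name__ == "__main__":
    print("threads:", total_threads(CORES, THREADS_PER_CORE))
    estimate = peak_gflops(CORES, ASSUMED_OPS_PER_CLOCK, CLOCK_GHZ)
    print(f"assumed single precision peak: {estimate:.1f} gigaflops")
```

Under those assumptions the estimate comes in below one teraflops, which squares with Intel having needed to turn on all the cores and overclock the chip to hit that mark at SC09.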

Yes, it is a graphics card. But it is just a graphics card that no one is ever going to write a graphics driver for — unless some smart asses in the open source community do. Which, by the way, will be possible when the commercial variants of the MIC chips become available. Intel could not stop this, even though it is not promoting MIC chips for use as discrete graphics chips.