
Oracle and HP's database machine predicated on Voltaire

Israeli firm says InfiniBand is best of all possible IOs

Interview "Common sense is not so common" is a quotation attributed to Voltaire, the 18th century French philosopher, essayist, writer and wit. So, how sensible were HP and Oracle in basing their database machine around an IO fabric that has struggled to find favour beyond the HPC market?

The fabric in question is InfiniBand, and the switches come from Voltaire - the Israeli firm, rather than the philosopher. InfiniBand is rated at 20Gbit/s, and Voltaire would like the idea that speed and daring are involved in Oracle and HP's decision to go with InfiniBand rather than 10GigE, Fibre Channel or a SAS fabric.

High-performance computing (HPC) applications use InfiniBand as a cluster or grid interconnect. Its bandwidth is so high, with 40Gbit/s possible, that its backers tout it as a data centre convergence fabric candidate with other protocols - Ethernet, Fibre Channel, whatever - running on top of it.

That's a theoretical possibility, but is it practicable? Can InfiniBand break out of its HPC niche into general data centre use? We asked Asaf Somekh, Voltaire's VP for strategic alliances, some questions about the Database Machine's use of InfiniBand and what it might mean. Asaf's answers have not been edited at all.

El Reg: There are two grids in the Oracle Database Machine; one for the database servers and one for the Exadata storage servers. How is InfiniBand used within and between these grids?

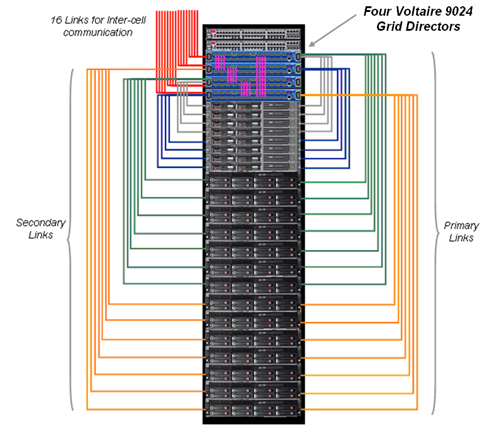

Asaf Somekh: The HP Oracle Database Machine is designed to run large, multi-terabyte data warehouses with 10x faster performance than conventional database solutions. The HP Oracle Database Machine uses 14 Oracle Exadata Servers along with 8 Oracle RAC 11g database servers connected using four Voltaire Grid Director 24-port InfiniBand switches. The four Voltaire switches form a unified fabric which is used both for server and storage communication (see the diagram below). Separate grids for servers and storage are not required – because of the high throughput that InfiniBand provides, the same InfiniBand wires are used for running both types of traffic over a single fabric.

InfiniBand fabric layout in Oracle Database Machine.

Not only does the Oracle HP Database Machine take advantage of InfiniBand’s 20 Gigabit bandwidth and low latency, it also uses Reliable Datagram Sockets (RDS) and Remote Direct Memory Access (RDMA) – which enables zero loss, zero copy – in order to deliver extreme I/O performance.
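
For a rough feel of the numbers Somekh quotes, here is a back-of-envelope sketch in Python. It takes the 14 Exadata servers, 8 database servers, four 24-port switches and the 20Gbit/s link rating from the text above. Everything else is an assumption for illustration: the function names are hypothetical, the number of InfiniBand links per server is not stated in the interview, so it is left as a parameter, and the transfer time uses the raw link rating with no allowance for encoding or protocol overhead.

```python
def port_budget(servers, switches, ports_per_switch, links_per_server):
    """Return (ports_used, ports_total, ports_spare) for a switched fabric.

    links_per_server is an assumption; the interview does not say how many
    InfiniBand links each server has. Spare ports are what remains for
    inter-switch links and expansion.
    """
    used = servers * links_per_server
    total = switches * ports_per_switch
    return used, total, total - used


def transfer_seconds(num_bytes, link_gbit_per_s):
    """Idealised time to move num_bytes over one link at its raw rating.

    Ignores encoding and protocol overhead, so real transfers take longer.
    """
    return (num_bytes * 8) / (link_gbit_per_s * 1e9)


if __name__ == "__main__":
    servers = 14 + 8  # Exadata servers plus RAC database servers, per the text
    for links in (1, 2):  # hypothetical link counts per server
        used, total, spare = port_budget(servers, 4, 24, links)
        print(f"{links} link(s) per server: {used}/{total} ports used, {spare} spare")

    one_terabyte = 10**12  # bytes
    for rate in (10, 20):  # 10GigE-class versus the quoted InfiniBand rating
        secs = transfer_seconds(one_terabyte, rate)
        print(f"1TB at {rate}Gbit/s: {secs:.0f}s")
```

Even with two links per server, the four switches would have ports to spare under these assumptions, which fits the claim that a single fabric can carry both server and storage traffic.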